Audio Compression Technology: Definition and Principles

Audio compression technology refers to the process of reducing the size of digital audio files without significantly affecting the perceived quality. This is achieved through advanced digital signal processing techniques that eliminate redundant or less perceptible information from the original audio stream, typically in PCM format.

Compression coding involves encoding the audio data in a more efficient way, while decompression or decoding reverses the process to restore the original file. However, during this process, some noise or distortion may be introduced, depending on the type of compression used.

There are two main types of audio compression: lossy and lossless. Lossy compression, such as MP3, WMA, and OGG, reduces file size by discarding some data, which can lead to a slight decrease in audio quality. On the other hand, lossless compression, like APE, FLAC, and WavPack, retains all the original data, allowing for perfect reconstruction of the source file. These formats are commonly used when high fidelity is essential.

Audio compression technology refers to the process of reducing the size of digital audio files without significantly affecting the perceived quality. This is achieved through advanced digital signal processing techniques that eliminate redundant or less perceptible information from the original audio stream, typically in PCM format.

Compression coding involves encoding the audio data in a more efficient way, while decompression or decoding reverses the process to restore the original file. However, during this process, some noise or distortion may be introduced, depending on the type of compression used.

There are two main types of audio compression: lossy and lossless. Lossy compression, such as MP3, WMA, and OGG, reduces file size by discarding some data, which can lead to a slight decrease in audio quality. On the other hand, lossless compression, like APE, FLAC, and WavPack, retains all the original data, allowing for perfect reconstruction of the source file. These formats are commonly used when high fidelity is essential.

**1. Digital Audio Features**

The quality of digital audio depends on two key parameters: sampling frequency and quantization bits. A higher sampling frequency captures more details over time, while a higher bit depth provides more precise amplitude representation. However, these factors also increase the file size and demand greater storage and transmission capacity.

For example, with a sampling rate of 44.1 kHz, 16-bit quantization, and stereo channels, the data rate reaches approximately 1.4 Mbps, which translates to about 1.7 MB per second. This large volume of data poses challenges for storage and transmission, making compression essential.

Audio compression aims to reduce the data rate while maintaining acceptable sound quality. It works by analyzing the original signal and removing components that are not easily perceptible to the human ear, thus minimizing the amount of data needed for encoding.

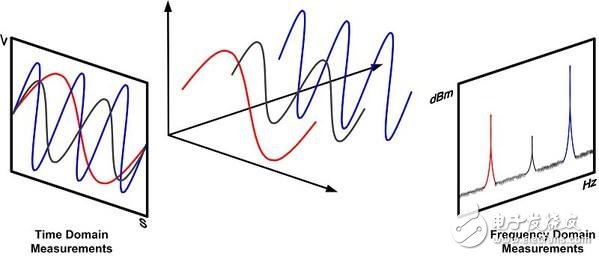

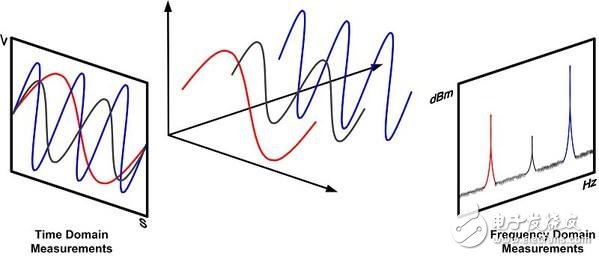

**2. Time Domain Redundancy**

Time domain redundancy refers to patterns in the audio signal that can be exploited for compression:

1. **Non-uniform Amplitude Distribution**: Not all parts of the signal use the full dynamic range, so some bits are wasted.

2. **Sample Correlation**: Adjacent samples are often similar, allowing for efficient prediction and encoding.

3. **Periodic Correlation**: Some sounds repeat over time, creating predictable patterns.

4. **Long-term Self-correlation**: Over longer intervals, the signal maintains a stable structure.

5. **Silence**: Periods of silence can be removed or compressed to save space.

**1. Digital Audio Features**

The quality of digital audio depends on two key parameters: sampling frequency and quantization bits. A higher sampling frequency captures more details over time, while a higher bit depth provides more precise amplitude representation. However, these factors also increase the file size and demand greater storage and transmission capacity.

For example, with a sampling rate of 44.1 kHz, 16-bit quantization, and stereo channels, the data rate reaches approximately 1.4 Mbps, which translates to about 1.7 MB per second. This large volume of data poses challenges for storage and transmission, making compression essential.

Audio compression aims to reduce the data rate while maintaining acceptable sound quality. It works by analyzing the original signal and removing components that are not easily perceptible to the human ear, thus minimizing the amount of data needed for encoding.

**2. Time Domain Redundancy**

Time domain redundancy refers to patterns in the audio signal that can be exploited for compression:

1. **Non-uniform Amplitude Distribution**: Not all parts of the signal use the full dynamic range, so some bits are wasted.

2. **Sample Correlation**: Adjacent samples are often similar, allowing for efficient prediction and encoding.

3. **Periodic Correlation**: Some sounds repeat over time, creating predictable patterns.

4. **Long-term Self-correlation**: Over longer intervals, the signal maintains a stable structure.

5. **Silence**: Periods of silence can be removed or compressed to save space.

**3. Frequency Domain Redundancy**

Frequency domain redundancy arises from the uneven distribution of energy across different frequencies:

1. **Non-uniform Power Spectral Density**: Low-frequency regions often have more energy than high-frequency ones, creating opportunities for compression.

2. **Speech-Specific Patterns**: Speech signals have formants at certain frequencies, which can be efficiently represented.

**4. Auditory Redundancy**

Human hearing has limitations in both frequency and time resolution. Based on psychoacoustic models, certain parts of the audio signal can be masked and removed without affecting perception. This allows for further reduction in data rates, enabling more efficient audio transmission and storage.

By leveraging these redundancies, audio compression technology plays a crucial role in modern digital media, ensuring high-quality audio while keeping file sizes manageable.

**3. Frequency Domain Redundancy**

Frequency domain redundancy arises from the uneven distribution of energy across different frequencies:

1. **Non-uniform Power Spectral Density**: Low-frequency regions often have more energy than high-frequency ones, creating opportunities for compression.

2. **Speech-Specific Patterns**: Speech signals have formants at certain frequencies, which can be efficiently represented.

**4. Auditory Redundancy**

Human hearing has limitations in both frequency and time resolution. Based on psychoacoustic models, certain parts of the audio signal can be masked and removed without affecting perception. This allows for further reduction in data rates, enabling more efficient audio transmission and storage.

By leveraging these redundancies, audio compression technology plays a crucial role in modern digital media, ensuring high-quality audio while keeping file sizes manageable.

Portable Splicer Machine,Mini Fusion Splicer,Fiber Splicer,Optical Splicer

Guangdong Tumtec Communication Technology Co., Ltd , https://www.gdtumtec.com